Artificial Intelligence has transformed the way many industries operate, and it has had a particularly significant impact on the human resources sector. As AI’s influence extends into more sensitive areas, the stakes rise as well.

Today, cyber threats are often identified and fixed only after the damage has been done, and security compliance gaps are generally revealed only during audits.

When AI systems fail, the consequences aren’t speculative. They’re real, personal, and often irreversible. Cybersecurity teams, already stretched by an evolving threat landscape, are overwhelmed by alerts and manual tasks, which can slow response times and mask critical risks.

That’s why ServiceNow takes a fundamentally different approach to testing AI, especially when the risk of harm is high. Its AI risk management solutions support more effective security operations and include dozens of cybersecurity tools that help prevent, detect, and remediate cyberattacks.

ServiceNow offers a high-risk AI testing strategy designed for trust, not just for compliance. It’s designed to capture what others miss and to adapt as technology and regulations evolve. In this blog, we will look at how ServiceNow protects high-risk AI systems with its advanced security orchestration. So, let’s begin!

Understanding High-risk AI Systems

High-risk AI systems are AI applications that significantly affect human rights, safety, and critical decision-making. They are mainly used in sectors such as healthcare diagnostics, employee hiring, financial services, law enforcement, and infrastructure management, where errors, bias, or security breaches can have severe consequences.

According to the ServiceNow AI accountability framework, which may draw on the EU AI Act and the NIST AI Risk Management Framework, high-risk AI systems commonly have one or more of the following characteristics:

- High-stakes outcomes

- Limited human oversight or explainability

- Vulnerability to bias or misuse

- Heavy reliance on sensitive data

- Integration into critical infrastructure

Given their greater impact, high-risk AI systems require more robust governance, ethical design, regular data safety monitoring, and transparency, all of which also help build trust. ServiceNow’s high-risk AI protection systems offer robust policies, regulatory compliance strategies, and technical security to address these requirements. This makes ServiceNow a reliable platform for enterprise-level AI.

ServiceNow’s Approach to Responsible AI

ServiceNow prioritizes responsible AI at every step of the development process to build trust and accountability into its AI services. This means balancing innovation against ethical obligations, compliance, and user data safety, particularly in high-risk use cases.

Through cross-functional AI governance, a dedicated AI ethics framework, and a strong compliance culture, ServiceNow aims to ensure that its AI technologies deliver value without compromising fairness, transparency, or human rights.

1. Defining Responsible AI

Responsible AI at ServiceNow is a comprehensive framework guiding the development, deployment, and governance of AI systems. The ServiceNow AI accountability framework keeps them:

- Legally compliant

- Ethically grounded

- Technically strong

- Aligned with human values

Responsible AI also deals with fairness, transparency, accountability, and compliance, along with ethics. It ensures that AI minimizes harm and serves the public good. Responsible AI considers the societal impact of AI and includes compliance and security practices to gain trust and align AI development with established values.

2. Ethical AI: Core Principles

Ethical AI is often seen as an integral component of responsible AI, focused specifically on the moral and ethical dimensions of AI development and use. ServiceNow’s Responsible AI framework is guided by several core principles, including transparency and fairness.

Ethical AI is also helpful for IT Service Management, ensuring data privacy through ServiceNow AI compliance, data minimization, consent mechanisms, and the secure handling of sensitive information.

These principles are embedded into ServiceNow’s product development lifecycle and enforced through governance, training, and auditing processes to ensure AI is deployed safely, even in the most sensitive and high-risk environments.

Core principles of ethical AI also include accountability and security. ServiceNow supports accountability by clearly defining roles and responsibilities, with human oversight integrated into the AI decision-making process. On the security side, robust controls protect high-risk AI systems from tampering, misuse, and adversarial attacks.

ServiceNow In-House vs Partner: AI Risk Management Impact

ServiceNow protects high-risk AI systems through various methods, and the choice between implementing in-house and working with a certified partner can significantly influence the effectiveness of AI risk management.

- In-House Implementation: Organizations that build and manage their ServiceNow AI capabilities internally often benefit from greater control and customization.

- Partner-led Implementation: Certified ServiceNow partners bring domain-specific expertise and best practices from multiple implementations. This is particularly beneficial for high-risk sectors like healthcare, finance, and government.

Whether in-house or partner-led, the ultimate impact on AI risk management depends on the governance maturity, security practices, and transparency standards embedded into the deployment strategy.

ServiceNow’s platform supports both models; what matters is how rigorously each path upholds responsible AI principles. The table below highlights the differences between the ServiceNow in-house and ServiceNow partner approaches in the context of AI risk management:

| Criteria | ServiceNow In-House | ServiceNow Partner |
| --- | --- | --- |
| Control Over AI Governance | High – full control over AI lifecycle and compliance | Moderate – depends on partner alignment with org policies |
| Customization & Flexibility | Extensive – tailored to specific internal risk frameworks | Standardized – based on partner’s implementation model |
| Speed of Deployment | Slower – due to internal development and validation cycles | Faster – partners use proven frameworks and accelerators |
| Expertise in AI Risk Management | Depends on in-house talent and training | High – experienced with diverse industries and regulations |
| Compliance Readiness (EU AI Act, NIST) | Internal teams must stay up-to-date manually | Partners provide regulatory-aligned setups by default |
| Cost | Potentially higher upfront (resource-heavy) | More predictable with predefined packages and SLAs |
| Post-deployment Monitoring | Fully owned by internal teams | Often included as a managed service |
| Scalability | Slower unless skilled resources are available | Faster due to repeatable partner methodologies |
| Transparency & Accountability | High – all decisions and models are in-house | Shared – depends on contract and visibility into partner work |


ServiceNow vs SAP: Who Handles High-risk AI Better?

Which of the two handles high-risk AI more effectively? Both ServiceNow and SAP protect high-risk AI systems and recognize the critical importance of responsible AI development, deployment, and governance.

ServiceNow

ServiceNow’s AI governance and oversight excel at managing AI systems across enterprise workflows and IT operations. If your high-risk AI involves automated decision-making in service delivery, HR, or IT operations, where workflow integrity and regulatory compliance are paramount, ServiceNow provides a dedicated, structured framework for managing those AI risks from intake through continuous monitoring.

It is well suited to organizations that want a clear AI audit trail and proactive risk management for AI applications.

Choose ServiceNow if your main goal is robust AI governance, risk management, and compliance across your enterprise IT and workflow landscape, especially for AI systems that might influence employees, customers, or services.

SAP

Where ServiceNow focuses on governance across workflows, SAP excels in the embedded management of AI risks within core business processes, especially finance, supply chain, and procurement.

If your high-risk AI involves financial transactions, fraud detection, demand forecasting, or supply chain optimization, where data integrity, financial compliance, and operational resilience are critical, SAP’s deep integration with ERP systems offers strong, context-specific AI risk mitigation. It is a strong fit for companies that want AI risk handled directly within their business operations.

Technical Safeguards for High-risk AI

ServiceNow integrates robust technical safeguards into its platform to reduce the risks associated with high-impact AI applications. These controls help ensure that AI behaves as expected, remains fair over time, and can be audited and understood by both technical and non-technical stakeholders.

1. Drift Detection and Continuous Monitoring:

AI models can deteriorate over time due to changes in data or real-world circumstances. The platform continuously monitors performance and flags deviations in the AI’s accuracy or behavior, supporting ongoing reliability.
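The article does not say how drift is measured, so the following is only a minimal sketch of one common approach: the Population Stability Index (PSI), which compares the distribution of a model score at training time with its distribution in production. The function names, the ten-bucket layout, and the 0.2 alert threshold (a frequently cited rule of thumb) are assumptions for illustration, not ServiceNow APIs.

```python
import math
from typing import Sequence


def population_stability_index(
    baseline: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
) -> float:
    """Compare two samples of a model score; a larger value means more drift."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def bucket_shares(values: Sequence[float]) -> list:
        counts = [0] * bins
        for v in values:
            # Clamp out-of-range values into the first or last bucket.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor keeps the logarithm defined for empty buckets.
        return [max(c / len(values), 1e-6) for c in counts]

    expected = bucket_shares(baseline)
    actual = bucket_shares(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def has_drifted(baseline, current, threshold: float = 0.2) -> bool:
    """Flag drift when PSI exceeds the assumed rule-of-thumb threshold."""
    return population_stability_index(baseline, current) > threshold
```

In practice a check like this would run on a schedule against recent production data, with a flagged result routed to a human reviewer rather than acted on automatically.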

2. Explainability and Model Transparency:

To maintain employee trust and accountability, ServiceNow aims to make clear what its AI functions do and how they reach decisions, going beyond the “black box” to reveal the logic and data drivers.
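As a simple illustration of what revealing the “data drivers” can mean, the sketch below breaks a linear score into per-feature contributions. It assumes a plain weighted-sum model; the function name, feature names, and weights are hypothetical, and real explainability tooling for non-linear models is considerably more involved.

```python
def explain_linear_score(weights, features, bias=0.0):
    """Return the score plus each feature's contribution, largest impact first."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


# Hypothetical weights and inputs, for illustration only.
score, drivers = explain_linear_score(
    weights={"years_experience": 0.5, "skills_match": 2.0},
    features={"years_experience": 4, "skills_match": 0.5},
)
```

Showing the ranked drivers next to the score gives a reviewer something concrete to question before accepting a recommendation.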

3. Tracking Audit Logs and Decisions:

Every action, input, and output of the AI is recorded. The audit logs allow any decision to be traced and reconstructed, which is crucial for regulatory compliance, assigning accountability, and forensic analysis.
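One way to make such a log trustworthy is to chain entries together with hashes, so that altering an earlier record is detectable. The sketch below is an assumption about how this could be done, not a description of ServiceNow’s implementation; the `AuditLog` class and its field names are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field


@dataclass
class AuditLog:
    """Append-only, hash-chained log of AI decisions (illustrative only)."""

    entries: list = field(default_factory=list)

    @staticmethod
    def _digest(body: dict) -> str:
        # Hash a canonical JSON form so the same entry always hashes the same way.
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def record(self, actor: str, model_input: dict, model_output: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = {
            "actor": actor,
            "input": model_input,
            "output": model_output,
            "prev_hash": prev_hash,
        }
        entry = {**body, "hash": self._digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Return True if every entry still matches its hash and its predecessor."""
        prev_hash = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(body):
                return False
            prev_hash = entry["hash"]
        return True
```

A production log would also carry timestamps and be written to storage the AI system itself cannot modify; the chain only makes tampering evident, it does not prevent it.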

Global AI Regulation Compliance

Governments and regulatory bodies have imposed strict rules and compliance policies on high-risk AI systems. ServiceNow works to ensure that the AI services it offers keep pace with the continuously evolving global regulatory landscape. Key regulatory frameworks include:

1. EU AI Act

ServiceNow implementations incorporate risk classification, documentation requirements, and transparency features in line with the EU AI Act’s stringent requirements for high-risk AI.

2. NIST AI Risk Management Framework (USA)

ServiceNow protects high-risk AI systems by following NIST guidelines for trustworthiness, risk identification, and continuous monitoring of AI systems.

3. OECD AI Principles & ISO/IEC 42001

ServiceNow protects high-risk AI systems by aligning with international standards for responsible AI. It reinforces fairness, explainability, and accountability in its AI ecosystem.

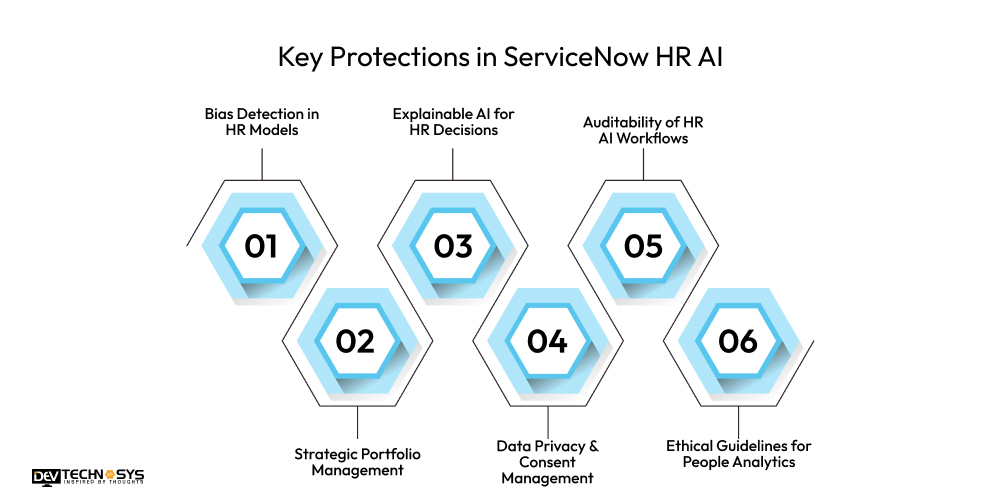

Key Protections in ServiceNow HR AI

Artificial intelligence (AI) in HR service delivery can significantly improve efficiency, yet it also carries high risk when used for HR tasks. ServiceNow’s HR Service Delivery (HRSD) platform handles these risks with built-in safeguards tailored to maintain fairness while keeping sensitive employee data secure.

1. Bias Detection in HR Models

ServiceNow uses fairness and bias evaluation tools to detect and mitigate discrimination in AI models used for recruitment, internal mobility, or compensation analytics.
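The article does not name the specific metrics used, so the following is a hedged sketch of one widely used check, the disparate impact ratio: the lowest selection rate across groups divided by the highest. A ratio below 0.8 is often treated as a signal for review (the “four-fifths” rule of thumb). The function names and the sample data are hypothetical.

```python
from collections import defaultdict


def selection_rates(records):
    """records: iterable of (group, selected) pairs -> {group: selection rate}."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {g: selected[g] / totals[g] for g in totals}


def disparate_impact_ratio(records):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(records)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Hypothetical screening outcomes: group A selected 8 of 10, group B 4 of 10.
sample = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 4 + [("B", False)] * 6
)
needs_review = disparate_impact_ratio(sample) < 0.8
```

A low ratio does not prove discrimination on its own; it is a prompt for a human to examine the model and its training data.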

2. Strategic Portfolio Management

ServiceNow integrates advanced AI across its platforms, including HR Service Delivery (HRSD) and strategic portfolio management (SPM). These areas include sensitive decisions regarding people and enterprise investments, making them high-risk zones for AI usage.

3. Explainable AI for HR Decisions

Any AI-generated insights, such as candidate rankings or employee engagement scores, are accompanied by transparent explanations, enabling HR leaders to make informed, human-in-the-loop decisions.

4. Data Privacy & Consent Management

Employee data is handled in strict compliance with GDPR, HIPAA, and internal company policies. Consent mechanisms and role-based access control are applied rigorously.

5. Auditability of HR AI Workflows

Every AI interaction in the HR system is logged and traceable, supporting internal audits, compliance reviews, and employee appeals.

6. Ethical Guidelines for People Analytics

ServiceNow for HR enables organizations to establish boundaries for AI usage, preventing overreach in areas such as productivity surveillance or predictive attrition scoring.

Security and Infrastructure Protection

ServiceNow secures high-risk AI systems with enterprise-grade infrastructure, privacy controls, and robust access management.

1. Data Privacy and Encryption

ServiceNow uses AES-256 encryption at rest and TLS 1.2+ in transit to protect data across all layers. Data residency and tenant isolation support compliance with global privacy laws, such as the GDPR.
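On the client side, an integration that calls an AI service can enforce the same “TLS 1.2 or newer” floor itself. The sketch below uses Python’s standard `ssl` module and covers only the in-transit half; encryption at rest is handled by the platform and is not shown. The function name `make_client_context` is an assumption for illustration.

```python
import ssl


def make_client_context() -> ssl.SSLContext:
    """Build a client TLS context that refuses anything older than TLS 1.2."""
    # The default context already verifies certificates and hostnames.
    context = ssl.create_default_context()
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context
```

The returned context can be passed to standard-library clients such as `urllib.request.urlopen(url, context=...)` so that a connection to a server offering only older protocol versions fails instead of silently downgrading.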

2. Secure Development Lifecycle (SDLC)

Security is embedded throughout ServiceNow’s development process, including threat modeling, automated code scanning, and regular penetration testing, to ensure AI features are secure by design.

3. Role-Based Access Control (RBAC)

RBAC enforces least-privilege access to AI tools and data. Granular permissions, audit trails, and SoD (Segregation of Duties) help prevent misuse and ensure accountability.
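The sketch below shows the two ideas in miniature: a deny-by-default permission check and a Segregation of Duties check that flags anyone who could both train and approve a model. The role names, permission strings, and conflict list are hypothetical and do not reflect ServiceNow’s actual role model.

```python
ROLE_PERMISSIONS = {
    "model_developer": {"model:train", "model:read"},
    "model_approver": {"model:approve", "model:read"},
    "hr_analyst": {"prediction:read"},
}

# Pairs of permissions that one person should not hold at the same time.
SOD_CONFLICTS = [("model:train", "model:approve")]


def permissions_for(roles):
    """Union of the permissions granted by each role; unknown roles grant nothing."""
    granted = set()
    for role in roles:
        granted |= ROLE_PERMISSIONS.get(role, set())
    return granted


def is_allowed(roles, permission):
    """Deny by default: allow only what a role explicitly grants."""
    return permission in permissions_for(roles)


def sod_violations(roles):
    """Return the conflicting permission pairs this set of roles would combine."""
    granted = permissions_for(roles)
    return [pair for pair in SOD_CONFLICTS if set(pair) <= granted]
```

Running `sod_violations` when roles are assigned, rather than when an action is attempted, catches risky combinations before they can be used.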

Managing Risk Beyond Security

In an AI-driven world, ServiceNow is changing how businesses approach risk management. With a focus on AI inventory, lifecycle management, and compliance oversight, businesses gain visibility and control over their use of artificial intelligence while maintaining ethical practices and accountability throughout the process.

Additionally, ServiceNow’s new Digital Operational Resilience Management (DORM) solution extends the reach of risk management far beyond security, empowering businesses to tackle operational disruptions, compliance challenges, and emerging risks that they may encounter in today’s complex digital terrain.

According to a top ServiceNow development company, these key solutions enable companies to protect their digital assets and improve the resilience of operations. By addressing a broad range of risks, from data integrity to business continuity, ServiceNow helps companies keep up with evolving threats and keep their critical applications running smoothly.

The Future of Safe AI in ServiceNow

ServiceNow continues to build AI into its services with safety, transparency, and accountability as goals at every stage of development. As AI takes on more core business tasks, ServiceNow is enhancing its capabilities with stronger human-in-the-loop controls, risk detection, and increased transparency.

The platform closely follows emerging global regulations to ensure ongoing regulatory compliance. ServiceNow delivers AI that drives innovation while ensuring security, fairness, and reliability by setting the focus on explainability, auditability, and user control.

Conclusion

As AI becomes increasingly integrated into enterprise workflows, it is essential to ensure high security and effective management. ServiceNow employs a proactive and comprehensive strategy for defending high-risk AI systems.

It includes integrating responsible design practices and ethical governance frameworks along with robust technical safety measures and regulatory compliance.

Whether it is used for HR-related work or other critical fields, ServiceNow ensures that AI functions securely and effectively. Hire ServiceNow developers from Dev Tech to bring secure, AI-powered innovation to your enterprise, and stay tuned for more informative updates.

FAQs

1. What Qualifies as a High-Risk AI System in ServiceNow?

High-risk AI systems are those used in critical decision-making domains, such as recruitment, employee performance assessment, financial strategy development, or any procedure that has a significant impact on individuals or entities. These systems need improved supervision, equity, and transparency.

2. How Does ServiceNow Ensure Ethical Use of AI?

ServiceNow operates within a Responsible AI framework guided by ethical values such as impartiality, transparency, accountability, and privacy. This encompasses explainable AI, bias detection, and decision-making processes that involve human oversight.

3. What Technical Safeguards Does ServiceNow Implement for AI?

Key safeguards include encryption, drift detection, audit logs, access controls (RBAC), and continuous model monitoring. They guard against data breaches, unauthorized access, and improper model behavior.

4. How Does ServiceNow Comply With Global AI Regulations?

ServiceNow aligns with significant regulatory frameworks, including the GDPR, HIPAA, the EU AI Act, and the NIST AI Risk Management Framework. It includes processes that are audit-ready and designed with privacy in mind.